Table of Contents

1 | Introduction to Design Tokens

2 | Managing and Exporting Design Tokens With Style Dictionary

3 | Exporting Design Tokens From Figma With Style Dictionary

4 | Consuming Design Tokens From Style Dictionary Across Platform-Specific Applications

5 | Generating Design Token Theme Shades With Style Dictionary

6 | Documenting Design Tokens With Docusaurus

7 | Integrating Design Tokens With Tailwind

8 | Transferring High Fidelity From a Design File to Style Dictionary

9 | Scoring Design Tokens Adoption With OCLIF and PostCSS

10 | Bootstrap UI Components With Design Tokens And Headless UI

11 | Linting Design Tokens With Stylelint

12 | Stitching Styles to a Headless UI Using Design Tokens and Twind

What You’re Getting Into

In the previous article, I demonstrated how we can create an automated process to generate design tokens from a design file using a plugin and export them as platform deliverables using Style Dictionary and GitHub Actions.

I mentioned a fictional scenario in which we work for ZapShoes, an e-commerce application that ships shoes in a zap, and have been tasked with automating how changes to the company’s design system are reflected across its applications, which may run on different platforms with different technologies.

By creating an automated process that generates platform deliverables from the design file in a GitHub repository, we’ve solved most of our fictional problem.

Now that we have a central GitHub repository with a style dictionary that manages and exports design tokens, we need to finish solving the problem by finding the best way for different applications to consume the platform deliverables (the design tokens exported for a specific technology).

Put another way, we need a way to deliver design tokens to multiple applications within the fictional company. That way, changes in a design file will be reflected across the entire company through an automated process.

Unlike the previous article, this one will be less of a tutorial and more of a discussion of best practices, although I’ll include some code samples for each practice to make the discussion more digestible.

Consuming Design Tokens From Style Dictionary With GraphQL

When I came across Shopify’s Polaris design system for the last article, I noticed that they expose a GraphQL API that serves their design tokens.

As a big fan of GraphQL, I found this very intriguing, but it also sounded like a lot of unnecessary overhead to build an API just to deliver design details.

However, it dawned on me that if we have a style dictionary in a central GitHub repository, we don’t have to create our own GraphQL API.

Instead, we can use GitHub’s GraphQL API to read the platform deliverables in the output folder of that repository. In theory, the deliverables that are read could then be stored using a technology’s file system API.

For example, a repository for a Vue application could contain a Node script that fetches its deliverables in the style dictionary using the GitHub GraphQL API and writes them to local files using the Node file system API.

Now, you may be used to calling APIs to load data while your application is running. However, what I am suggesting is calling your API when your application builds, before it runs.

An application builds as you develop locally as well as before new code is deployed to production.

So, where and when should something like the Node script I mentioned above be called?

If it is only called in the deployment process, before new code is deployed to a production site, then there would be no way to access the tokens while developing locally. Developers could not use the design tokens while building features, which defeats the purpose.

However, if the design tokens are fetched both while developing locally and while deploying to production, there is a risk that the deployed tokens do not match what the developer saw locally. For example, the style dictionary could be updated between the developer’s last local fetch and the deployment. This might be a rare timing issue, but it is a risk nonetheless.

If the design tokens are fetched while developing locally, a script writes them to a folder, and that folder is committed to the origin of the repository, then there won’t be a risk of a discrepancy between local development and the production site.

The challenge with this approach is ensuring that every application in the company has a working script that reads the platform deliverables from the central style dictionary and writes them locally. There would also be the responsibility of reaching out to each application’s team to integrate this workflow into their development experience.

I can’t possibly cover every example within one platform-specific application, let alone across all the platform-specific applications and technologies that are out there.

However, let me use a React application as an example of how you might integrate the consumption of the design tokens into the development experience.

I have two solutions that came to mind.

Fetching Design Tokens Using Node and Husky

The first solution would be to leverage the prestart NPM script.

As the name implies, the prestart NPM script is triggered before running npm start.

NPM refers to this as a “life cycle” script because it executes around the life cycle of a “default” script (in this case, start).

We could have prestart call a Node script that fetches the design tokens via the GitHub GraphQL API and writes the tokens to a local folder (as suggested earlier).

Since the Node script would run before each npm start, we could integrate the workflow to retrieve the design tokens as part of the start-up process of our application.
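The wiring lives in the scripts field of package.json: a prestart entry such as "node scripts/fetch-tokens.js" (a hypothetical path) next to start. The sketch below models npm’s pre/post convention in plain JavaScript to show the order in which npm would run these scripts. It is a simplified model rather than npm’s implementation, and "react-scripts start" is an assumed Create React App start command.

```javascript
// Models the package.json scripts for a hypothetical React app whose
// prestart script runs the token fetch (scripts/fetch-tokens.js is assumed).
const packageJson = {
  scripts: {
    prestart: "node scripts/fetch-tokens.js",
    start: "react-scripts start",
  },
};

// Simplified model of npm's convention: for a script <name>, npm runs
// pre<name>, then <name>, then post<name>, skipping any that are undefined.
function scriptsRunBy(scripts, name) {
  return [`pre${name}`, name, `post${name}`]
    .filter((scriptName) => typeof scripts[scriptName] === "string")
    .map((scriptName) => ({ scriptName, command: scripts[scriptName] }));
}

for (const { scriptName, command } of scriptsRunBy(packageJson.scripts, "start")) {
  console.log(`${scriptName}: ${command}`);
}
// prints:
// prestart: node scripts/fetch-tokens.js
// start: react-scripts start
```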

This process of running npm start is already natural for local development as a JavaScript developer. However, it would put additional strain on developers, who would have to restart the application whenever they switch branches (for example, to work on a new feature) in order to fetch the latest tokens.

This additional strain could be resolved using Husky, a library that lets you hook into Git events (commit, push, checkout, etc.) and run an NPM script.

Specifically, Husky could hook into when a developer changes from one branch to another in the repository. When that happens, the Node script to read and write the design tokens would be called again.

I put together a sample so you can visualize what I’m describing: https://github.com/michaelmang/consume-style-dictionary-node/commits/master

Sourcing Design Tokens With Gatsby

The second solution is to source from the GitHub GraphQL API in a Jamstack ecosystem, such as Gatsby.

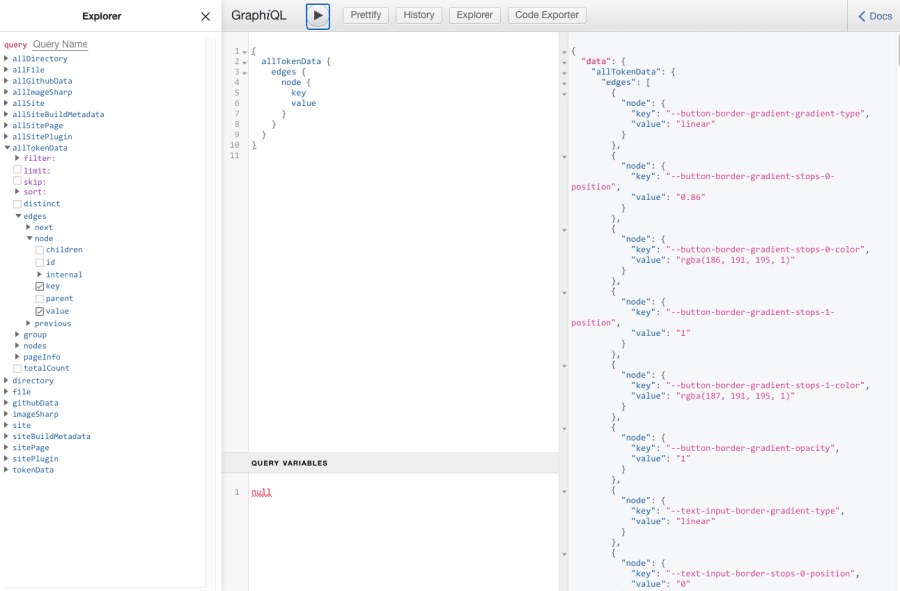

You could have the Gatsby application source from the GitHub API which reads from the style dictionary repository. The design tokens then are available via Gatsby’s GraphQL data layer.

With the help of a plugin called gatsby-source-github-api, I can read the contents of a platform deliverable file from the style dictionary, like _variables.css.

As you can see in the graphic above, the contents come back as plain text.

With some additional configuration in Gatsby, we can transform this plain text into a better format.

Gatsby exposes a gatsby-node.js file for working with its GraphQL data layer during the build lifecycle.

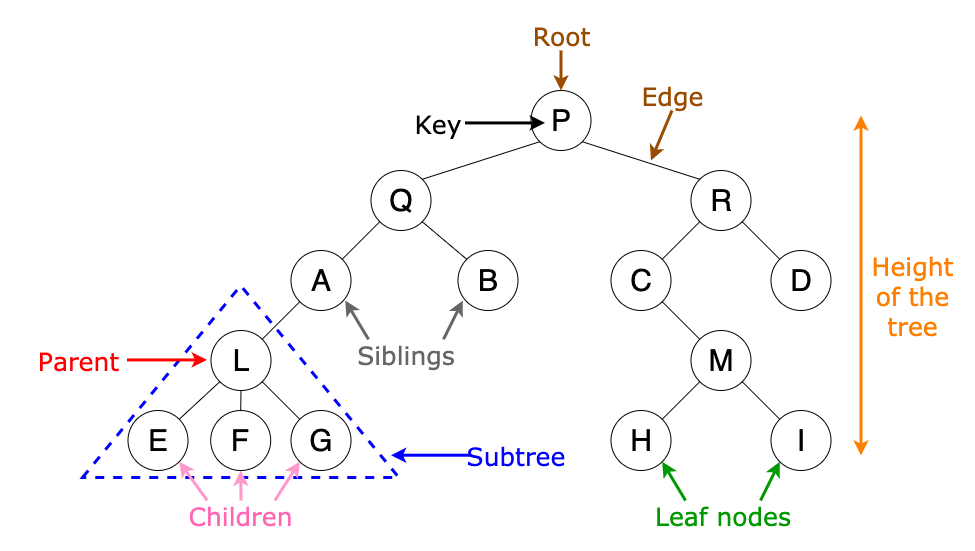

There are two ways in which “node” can be understood in Gatsby. First, gatsby-node.js lets us write code that works with the data layer using Node. Second, “node” refers to a node in a “data tree,” a common way of visualizing the organization of data in computer science.

In Gatsby, the data layer (which you can explore and query using the GraphQL explorer that is launched when developing a Gatsby application locally) consists of “nodes.”

In the graphic of the GraphQL explorer that I posted above, there is a node representing each result from the GraphQL query of the GitHub API:

    {
      allGithubData {
        nodes {
          rawResult {
            data {
              repository {
                content {
                  entries {
                    object {
                      text
                    }
                  }
                }
              }
            }
          }
        }
      }
    }

In the query above, text is the data within the node: the contents of the deliverable file as plain text.

Gatsby exposes APIs that can be used in the gatsby-node.js file to transform the data in the GitHub node.

Specifically, we could use the onCreateNode API to transform the text node, which is the platform deliverable represented as plain text, into multiple nodes representing the design tokens.

Here’s how I implemented it: https://github.com/michaelmang/consume-style-dictionary-gatsby/commits/master

With this approach, you wouldn’t need to copy the design tokens locally, since the tokens are sourced whenever you build the application, both locally and before deployment. This doesn’t prevent the timing issue I mentioned earlier, but it is still a pleasant pairing.

The design tokens could then be queried from a component using Gatsby’s built-in tooling, such as the useStaticQuery hook.

Thoughts On This Approach

First, bear in mind that GraphQL may not be the best means of communicating data across the range of platforms and technologies that Style Dictionary supports. If that’s the case, you could use GitHub’s REST API instead; all the pros and cons of this approach would remain.
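As a sketch of the REST variant, the function below reads one deliverable through GitHub’s “get repository content” endpoint (GET /repos/{owner}/{repo}/contents/{path}), which returns a file’s contents base64-encoded. The owner, repository, ref, and path are placeholders. The fetch function is injectable and defaults to the global fetch in Node 18+, so the function can be exercised offline.

```javascript
// Reads a file through GitHub's REST contents endpoint (a minimal sketch).
async function fetchDeliverableRest({ owner, repo, ref, filePath, token, fetchImpl = globalThis.fetch }) {
  // Encode each path segment but keep the slashes between them.
  const encodedPath = filePath.split("/").map(encodeURIComponent).join("/");
  const url = `https://api.github.com/repos/${owner}/${repo}/contents/${encodedPath}?ref=${encodeURIComponent(ref)}`;
  const headers = { Accept: "application/vnd.github+json" };
  if (token) headers.Authorization = `Bearer ${token}`;

  const response = await fetchImpl(url, { headers });
  if (!response.ok) {
    throw new Error(`GitHub API responded with ${response.status} for ${url}`);
  }
  const file = await response.json();
  if (file.type !== "file" || file.encoding !== "base64") {
    throw new Error(`${filePath} is not a base64-encoded file`);
  }
  // GitHub wraps the base64 content in newlines; Node's decoder ignores them.
  return Buffer.from(file.content, "base64").toString("utf8");
}
```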

Second, if transforming the text into individual design token nodes is overkill (and it very well may be), you could instead write the text to a CSS file. This would also be done in gatsby-node.js using the Node file system API.

Finally, while it was fun to create the code samples for these approaches (especially the Gatsby transformer plugin), I think better approaches emerge when you shift the paradigm from your applications “consuming” the deliverables in the style dictionary to the style dictionary “delivering” its deliverables.

Delivering Design Tokens

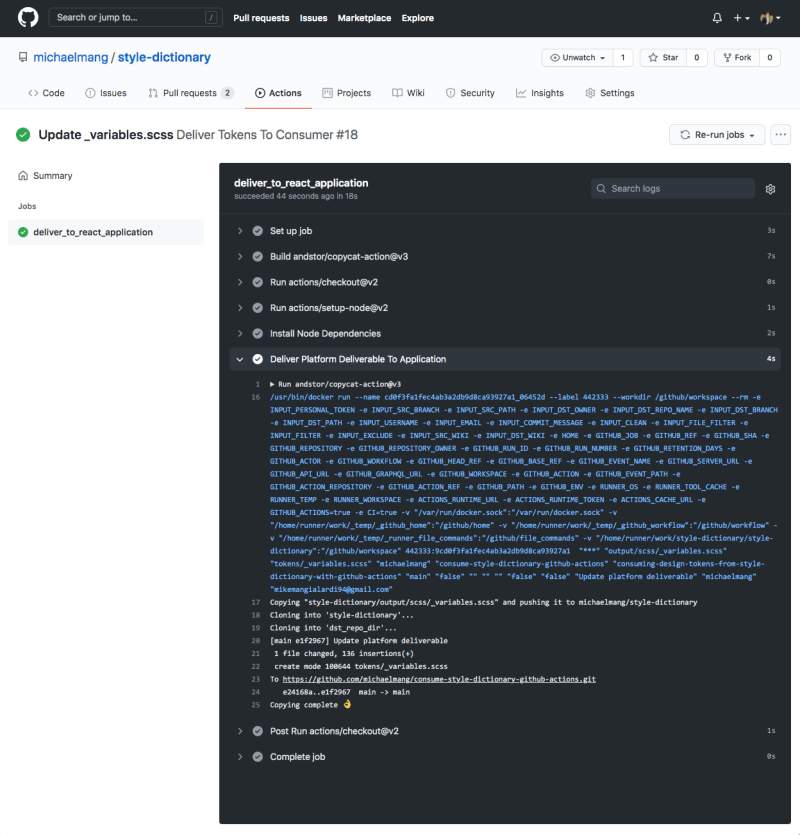

In the previous article, I created a GitHub Actions workflow in my style-dictionary repository.

You can view that workflow here: https://github.com/michaelmang/style-dictionary/blob/exporting-design-tokens-from-figma-with-style-dictionary/.github/workflows/export-tokens-on-input.yml

This workflow is triggered whenever a new design tokens file is received from Figma.

This workflow builds the JSON file representing the design tokens from Figma using Style Dictionary and commits the platform deliverables that were generated.

These generated platform deliverables were stored in an output folder.

We can chain a new workflow off of this previous workflow that is triggered whenever the output folder has been updated with new content.

When this happens, we’ll execute some actions that copy a file from the output folder and commit it to the repository of an application that consumes the central style dictionary.

Since each application will only be interested in receiving a specific type of platform deliverable that was generated by Style Dictionary, we can create a separate “job” for each application.

Jobs in a GitHub Actions workflow run in parallel by default.

So, on the push of new platform deliverables, each application that needs to consume one of these deliverables will have a job in the workflow to copy the needed deliverable to its repository.
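Conceptually, the workflow is a registry of consuming applications, each paired with the one deliverable it needs, where every entry is handled independently. The sketch below models that idea in JavaScript rather than workflow YAML. The job names, repositories, and paths are hypothetical, and copyFile stands in for whatever copy step a job actually runs. In GitHub Actions itself, the trigger would be a push filtered to the output folder through on.push.paths.

```javascript
// Hypothetical registry: one entry per consuming application (one job each).
const consumers = [
  {
    job: "copy-to-react-app",
    repository: "zapshoes/react-app",
    source: "output/_variables.scss",
    destination: "src/styles/_variables.scss",
  },
  {
    job: "copy-to-web-app",
    repository: "zapshoes/web-app",
    source: "output/_variables.css",
    destination: "src/styles/_variables.css",
  },
];

// Runs every copy independently and in parallel, like jobs without "needs".
// allSettled records each result so one failed copy does not hide the others.
async function deliverToConsumers(registry, copyFile) {
  const results = await Promise.allSettled(registry.map((consumer) => copyFile(consumer)));
  return results.map((result, index) => ({
    job: registry[index].job,
    ok: result.status === "fulfilled",
    error: result.status === "rejected" ? String(result.reason) : undefined,
  }));
}
```

Keeping the registry in one place mirrors a benefit of the workflow approach: every consuming application is listed explicitly.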

This indeed means that for every new application that consumes the style dictionary, we would have to add a new job in the workflow.

However, we already have to update the Style Dictionary config every time we want it to generate a new platform deliverable. We’re just adding a step to the process.

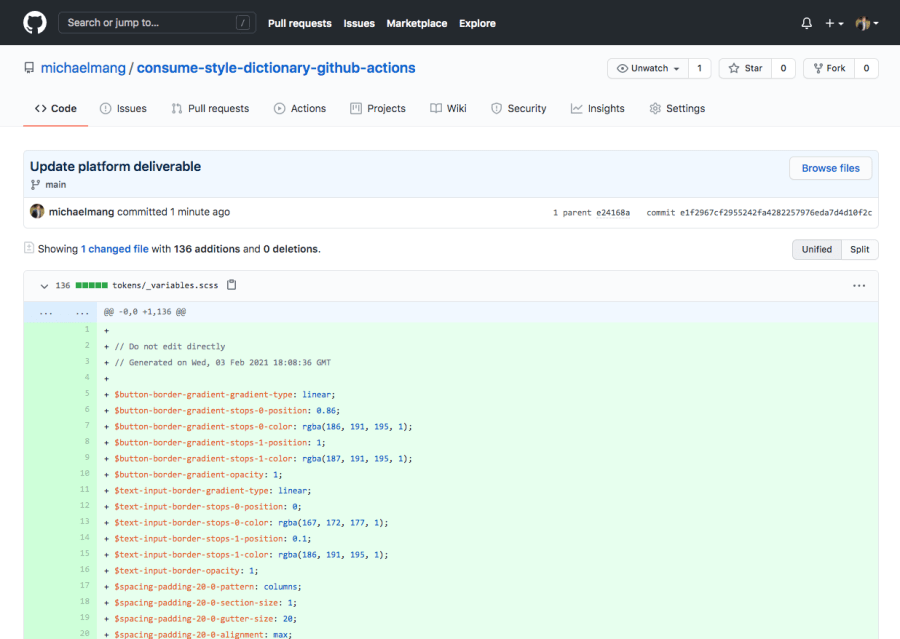

Here’s an example of me copying the _variables.scss deliverable generated by Style Dictionary to a React application:

https://github.com/michaelmang/style-dictionary/pull/3

And, the example application repository that the deliverable was copied to: https://github.com/michaelmang/consume-style-dictionary-github-actions

I leveraged a pre-defined action step for copying the deliverable to another repository.

Thoughts On This Approach

One caveat was that it required a Linux virtual environment. However, it probably isn’t too difficult to write your own action step if that’s a problem.

Another caveat was that it uses HTTPS to clone and push to your repositories, so you’ll need to make sure the remote URL for the consuming repository is HTTPS and not SSH.
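If a consuming repository is currently referenced by an SSH remote, a small helper like the one below can derive the HTTPS equivalent. The helper is hypothetical and not part of the action. The resulting URL could then be applied with git remote set-url origin followed by the URL. The helper only recognizes github.com remotes.

```javascript
// Converts a GitHub SSH remote (git@github.com:owner/repo.git or
// ssh://git@github.com/owner/repo.git) to its HTTPS form.
// HTTPS remotes are returned unchanged; anything else is rejected.
function toHttpsRemote(remote) {
  const scpStyle = /^git@github\.com:([^/]+)\/(.+?)(\.git)?$/;
  const sshUrl = /^ssh:\/\/git@github\.com\/([^/]+)\/(.+?)(\.git)?$/;
  const match = remote.match(scpStyle) || remote.match(sshUrl);
  if (match) {
    return `https://github.com/${match[1]}/${match[2]}.git`;
  }
  if (remote.startsWith("https://")) {
    return remote;
  }
  throw new Error(`Unrecognized remote URL: ${remote}`);
}
```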

Overall, I think it is a much better option to deliver (ship) the platform deliverables to all the consuming applications, rather than having each application manage how it consumes them.

This approach also leaves a record of all the consuming applications as they each have their own job documented in the workflow.

Conclusion

Congratulations, you now have all the skills necessary to automate the process of taking a design file and delivering its design tokens to as many applications as you need, whatever the platform and whatever the technology. 🎉

Perhaps there are other ways to solve the automation problem. Or, maybe you have a better idea to inform a best practice, especially given that these problems have a much bigger scale than an article can cover.

A coworker once told me that the best part of coding is getting to refactor. There’s some truth to that (as long as it isn’t a painful refactor of legacy code). I don’t claim to have given you all the solutions in this article, nor the best practices for every scenario, but I hope I’ve given you some skills and inspiration to have fun refactoring your design tokens process.

In the next article, we'll learn how to generate design token theme shades with Style Dictionary.

Pow, share, and discuss.